What is VITS?

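VITS (Variational Inference with adversarial learning for end-to-end Text-to-Speech) is a deep-learning-based, end-to-end speech synthesis model. Unlike a traditional two-stage TTS pipeline, it does not need a separately trained acoustic model and vocoder: a single model generates natural, fluent speech directly from text.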

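Its core ideas are:

- A variational autoencoder (VAE) structure

- Adversarial training

- A flow-based stochastic duration predictor

Internally it combines three kinds of models: a GAN, a VAE, and a normalizing flow.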
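
To make the VAE part a little more concrete, here is a minimal sketch of reparameterized sampling: drawing a latent value from a predicted mean and log standard deviation. It is a toy illustration, not code from the VITS repository; the function name `sample_latent` and the numbers are assumptions made for this example. The `noise_scale` argument plays the same role as the parameter of that name passed to `infer` in the synthesis script later in this post, where it scales the random noise added around the predicted mean.

```python
import math
import random


def sample_latent(mean, log_std, noise_scale=1.0, rng=random):
    """Draw z = mean + eps * exp(log_std) * noise_scale, with eps ~ N(0, 1)."""
    return [m + rng.gauss(0.0, 1.0) * math.exp(s) * noise_scale
            for m, s in zip(mean, log_std)]


mean = [0.5, -1.0, 2.0]      # toy values, not taken from a real model
log_std = [0.0, -0.5, 0.3]

# With noise_scale=0 the sample collapses to the mean (fully deterministic).
print(sample_latent(mean, log_std, noise_scale=0.0))
# A small noise_scale keeps samples close to the mean; larger values add variation.
print(sample_latent(mean, log_std, noise_scale=0.1))
```

In practice, a lower `noise_scale` tends to give more stable but flatter speech, while a higher one gives more varied prosody.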

Install the required tools

First, install the required tools:

# Base environment
conda create -n vits python=3.8
conda activate vits
pip install torch==1.10.0+cu113 torchvision==0.11.1+cu113 torchaudio==0.10.0 -f https://download.pytorch.org/whl/cu113/torch_stable.html
# Core dependencies
pip install numpy scipy matplotlib pandas
pip install tensorboard librosa unidecode inflect
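
Before moving on, it can help to confirm that the packages are visible to the active environment. The following is a small sketch using only the standard library; the helper name `missing_packages` is an assumption for this example, and the list uses import names, which may differ from pip package names.

```python
import importlib.util


def missing_packages(names):
    """Return the names from `names` that cannot be found for import."""
    return [n for n in names if importlib.util.find_spec(n) is None]


# Import names (not pip names) of the packages installed above.
required = ["torch", "torchaudio", "numpy", "scipy", "librosa", "unidecode", "inflect"]
missing = missing_packages(required)
print("Missing packages:", missing if missing else "none")
```

If anything is reported as missing, check that the `vits` conda environment is activated before rerunning the install commands.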

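Get the code repository

Instead of the official repository, this walkthrough uses the following fine-tuning fork, because it supports Chinese models:

git clone https://github.com/Plachtaa/VITS-fast-fine-tuning.git
cd VITS-fast-fine-tuning
pip install -r requirements.txt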

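Download the Genshin character model

Visit 【VITS-genshin】 to download the model files; the password is 8243. Download the whole directory and place it inside the source directory.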
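
The synthesis script later in this post reads a config from `./configs/uma_trilingual.json` and a checkpoint from `./pretrained_models/G_trilingual.pth`. As a quick sanity check, the sketch below reports which of those files are absent; the helper name `missing_files` is an assumption for this example, and it should be run from the repository root.

```python
from pathlib import Path


def missing_files(root, relative_paths):
    """Return the entries of `relative_paths` that do not exist under `root`."""
    return [p for p in relative_paths if not (Path(root) / p).is_file()]


# Paths read by the synthesis script in this post.
expected = ["configs/uma_trilingual.json", "pretrained_models/G_trilingual.pth"]
print("Missing files:", missing_files(".", expected) or "none")
```

If the downloaded directory uses a different layout, either move the files to these locations or adjust the two paths in the script.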

Synthesize your first speech clip

Create a new script in the repository directory with the following code:

import os
import logging

import torch
import soundfile as sf
from torch import no_grad, LongTensor

# Modules from the VITS-fast-fine-tuning repository
import utils
import commons
from text import text_to_sequence
from models import SynthesizerTrn

# Silence jieba's verbose log output
logging.getLogger("jieba").setLevel(logging.WARNING)

# Load the hyperparameters and build the synthesizer on the CPU
hps_ms = utils.get_hparams_from_file('./configs/uma_trilingual.json')
device = torch.device("cpu")
net_g_ms = SynthesizerTrn(
    len(hps_ms.symbols),
    hps_ms.data.filter_length // 2 + 1,
    hps_ms.train.segment_size // hps_ms.data.hop_length,
    n_speakers=hps_ms.data.n_speakers,
    **hps_ms.model)
_ = net_g_ms.eval().to(device)
# Load the pretrained generator weights (no optimizer is needed for inference)
_ = utils.load_checkpoint("./pretrained_models/G_trilingual.pth", net_g_ms, None)


def get_text(text, hps):
    """Convert raw text into a LongTensor of symbol ids."""
    text_norm = text_to_sequence(text, hps.symbols, hps.data.text_cleaners)
    if hps.data.add_blank:
        # Insert a blank token (id 0) between symbols
        text_norm = commons.intersperse(text_norm, 0)
    return LongTensor(text_norm)


def vits(text, language, speaker_id, noise_scale, noise_scale_w, length_scale):
    """Synthesize `text` and return (sample_rate, audio as a NumPy array)."""
    if not len(text):
        raise ValueError("Input text must not be empty!")
    text = text.replace('\n', ' ').replace('\r', '').replace(" ", "")
    if len(text) > 100:
        raise ValueError("Input text is too long!")
    # language: 0 = Chinese, 1 = Japanese; any other value leaves the text untagged
    if language == 0:
        text = "[ZH]{}[ZH]".format(text)
    elif language == 1:
        text = "[JA]{}[JA]".format(text)
    stn_tst = get_text(text, hps_ms)
    with no_grad():
        x_tst = stn_tst.unsqueeze(0).to(device)
        x_tst_lengths = LongTensor([stn_tst.size(0)]).to(device)
        sid = LongTensor([int(speaker_id)]).to(device)
        audio = net_g_ms.infer(x_tst, x_tst_lengths, sid=sid, noise_scale=noise_scale, noise_scale_w=noise_scale_w,
                               length_scale=length_scale)[0][0, 0].data.cpu().float().numpy()
    # Take the sample rate from the config (22050 Hz for this model)
    return hps_ms.data.sampling_rate, audio


if __name__ == "__main__":

    speakers = {
        "特别周": 0, "无声铃鹿": 1, "东海帝王": 2, "丸善斯基": 3, "富士奇迹": 4, "小栗帽": 5, "黄金船": 6, "伏特加": 7, "大和赤骥": 8, "大树快车": 9,
        "草上飞": 10, "菱亚马逊": 11, "目白麦昆": 12, "神鹰": 13, "好歌剧": 14, "成田白仁": 15, "鲁道夫象征": 16, "气槽": 17, "爱丽数码": 18, "青云天空": 19,
        "玉藻十字": 20, "美妙姿势": 21, "琵琶晨光": 22, "重炮": 23, "曼城茶座": 24, "美普波旁": 25, "目白雷恩": 26, "雪之美人": 28, "米浴": 29, "艾尼斯风神": 30,
        "爱丽速子": 31, "爱慕织姬": 32, "稻荷一": 33, "胜利奖券": 34, "空中神宫": 35, "荣进闪耀": 36, "真机伶": 37, "川上公主": 38, "黄金城市": 39, "樱花进王": 40,
        "采珠": 41, "新光风": 42, "东商变革": 43, "超级小溪": 44, "醒目飞鹰": 45, "荒漠英雄": 46, "东瀛佐敦": 47, "中山庆典": 48, "成田大进": 49, "西野花": 50,
        "春乌拉拉": 51, "青竹回忆": 52, "待兼福来": 55, "名将怒涛": 57, "目白多伯": 58, "优秀素质": 59, "帝王光环": 60, "待兼诗歌剧": 61, "生野狄杜斯": 62,
        "目白善信": 63, "大拓太阳神": 64, "双涡轮": 65, "里见光钻": 66, "北部玄驹": 67, "樱花千代王": 68, "天狼星象征": 69, "目白阿尔丹": 70, "八重无敌": 71,
        "鹤丸刚志": 72, "目白光明": 73, "樱花桂冠": 74, "成田路": 75, "也文摄辉": 76, "真弓快车": 80, "骏川手纲": 81, "小林历奇": 83, "奇锐骏": 85, "秋川理事长": 86,
        "綾地": 87, "因幡": 88, "椎葉": 89, "仮屋": 90, "戸隠": 91, "九条裟罗": 92, "芭芭拉": 93, "派蒙": 94, "荒泷一斗": 96, "早柚": 97, "香菱": 98, "神里绫华": 99,
        "重云": 100, "流浪者": 102, "优菈": 103, "凝光": 105, "钟离": 106, "雷电将军": 107, "枫原万叶": 108, "赛诺": 109, "诺艾尔": 112, "八重神子": 113, "凯亚": 114,
        "魈": 115, "托马": 116, "可莉": 117, "迪卢克": 120, "夜兰": 121, "鹿野院平藏": 123, "辛焱": 124, "丽莎": 125, "云堇": 126, "坎蒂丝": 127, "罗莎莉亚": 128,
        "北斗": 129, "珊瑚宫心海": 132, "烟绯": 133, "久岐忍": 136, "宵宫": 139, "安柏": 143, "迪奥娜": 144, "班尼特": 146, "雷泽": 147, "阿贝多": 151, "温迪": 152,
        "空": 153, "神里绫人": 154, "琴": 155, "艾尔海森": 156, "莫娜": 157, "妮露": 159, "胡桃": 160, "甘雨": 161, "纳西妲": 162, "刻晴": 165, "荧": 169, "埃洛伊": 179,
        "柯莱": 182, "多莉": 184, "提纳里": 186, "砂糖": 188, "行秋": 190, "奥兹": 193, "五郎": 198, "达达利亚": 202, "七七": 207, "申鹤": 217, "莱依拉": 228, "菲谢尔": 230
    }

    speed = 1
    output_dir = "output"
    os.makedirs(output_dir, exist_ok=True)

    # Synthesize the same sentence once per speaker
    for name, speaker_id in speakers.items():
        print("Speaker:", name)
        # Arguments: text, language (0 = Chinese), speaker id, noise_scale, noise_scale_w, length_scale
        sr, audio = vits('你好,我是玛丽', 0, speaker_id, 0.1, 0.668, 1.0 / speed)
        filename = os.path.join(output_dir, "{}.wav".format(name))
        sf.write(filename, audio, samplerate=sr)
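
The `vits` function above rejects text longer than 100 characters. One possible workaround, sketched here as an assumption rather than something the repository provides, is to split a long passage into chunks at sentence boundaries and synthesize each chunk separately. The helper name `split_text` and the choice of punctuation marks are illustrative.

```python
import re


def split_text(text, max_len=100):
    """Split text into chunks of at most max_len characters, preferring sentence breaks."""
    # Split after common Chinese and Western sentence-ending punctuation.
    sentences = [s for s in re.split(r'(?<=[。!?;!?;])', text) if s]
    chunks, current = [], ""
    for sentence in sentences:
        # Hard-split any single sentence that is itself too long.
        while len(sentence) > max_len:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:max_len])
            sentence = sentence[max_len:]
        if len(current) + len(sentence) > max_len:
            chunks.append(current)
            current = sentence
        else:
            current += sentence
    if current:
        chunks.append(current)
    return chunks


for chunk in split_text("你好。" * 50, max_len=100):
    print(len(chunk), chunk[:9])
```

Each chunk could then be passed to `vits(...)` in turn, with the returned audio arrays joined (for example with `numpy.concatenate`) before writing a single file.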

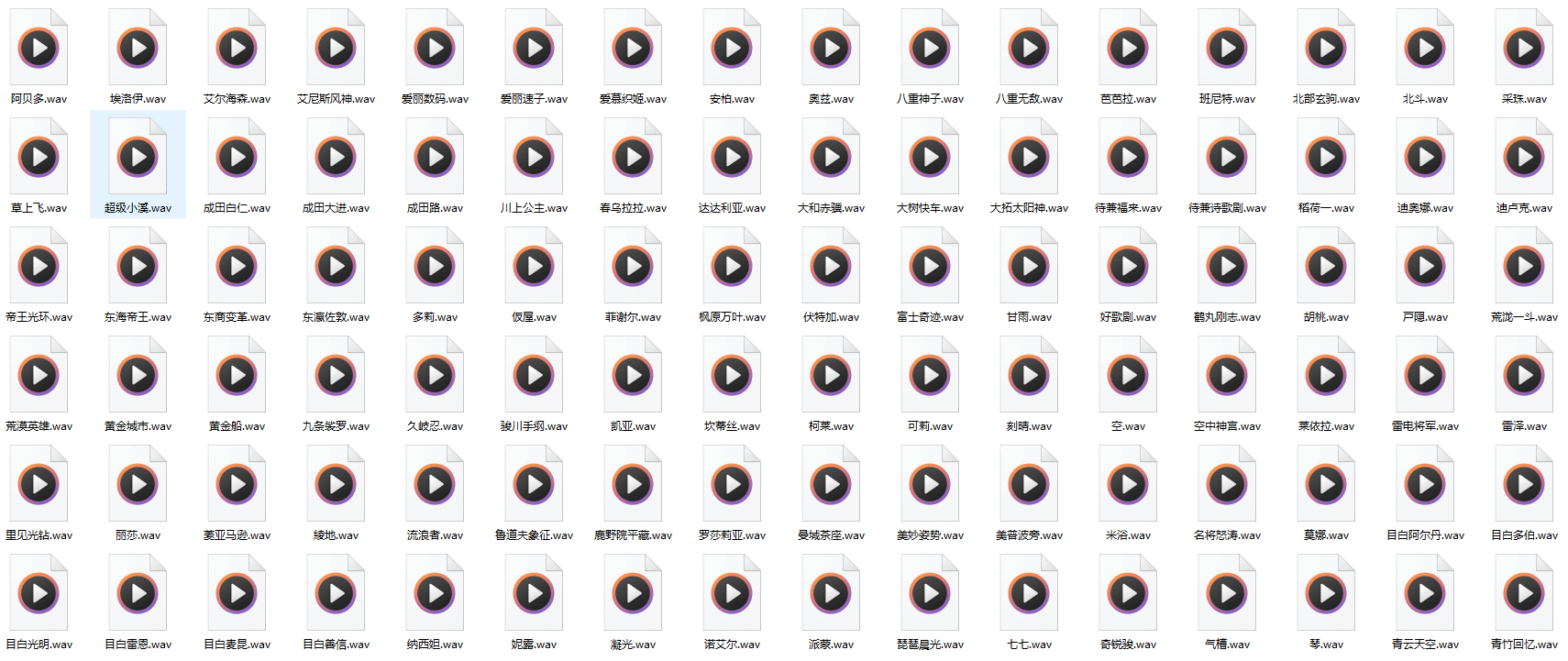

运行即可看到如下生成的效果:

最后来听下效果:

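On the whole, the synthesized speech sounds fairly realistic. However, because the voices are those of Genshin characters, for more formal use cases you will still need to fine-tune a model yourself.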
